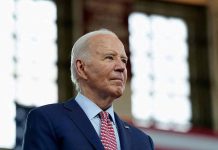

(RepublicanReport.org) – On October 30, 2023, President Joe Biden issued an executive order establishing new safety and security standards for the expanding use of artificial intelligence (AI). Two days later, Vice President Kamala Harris announced the creation of the United States AI Safety Institute (USAISI) to develop and oversee those newly ordered measures. The Commerce Secretary recently named a director for the USAISI.

On February 7, Secretary Gina Raimondo announced the appointment of Elizabeth Kelly as director of the recently established USAISI. Kelly currently serves in the administration as an economic policy advisor to the president. Media outlets reported that she helped write the initial draft of Biden’s executive order establishing the institute.

White House National Economic Council Director Lael Brainard noted that Kelly helps the president shape his technology and financial regulation agendas. Kelly also reportedly “worked to [establish] broad coalitions of [tech industry] stakeholders.”

The USAISI operates through the National Institute of Standards and Technology (NIST), an agency within the US Department of Commerce. In addition to developing safety and security standards, the institute will “evaluate emerging AI risks,” “provide testing environments” for researchers, create standards for “authenticating AI-generated content,” and oversee ongoing tests of AI models.

The Commerce Department confirmed that the USAISI will “leverage outside expertise” by working with other government agencies, industry leaders, and academics. The institute will also partner with similar bodies overseas, such as the United Kingdom’s AI Safety Institute.

Additionally, the USAISI is creating a consortium to “help equip and empower the collaborative [creation] of a new measurement science.” That work is meant to help the institute and its partners identify “proven, scalable, and interoperable” metrics and techniques that promote the development and proper use of safe, trustworthy AI systems.

The USAISI Consortium’s Cooperative Research and Development Agreement requires members to contribute technical expertise in a wide range of areas. A few examples include:

- Developing benchmarks and guidance sheets for evaluating and identifying AI capabilities, particularly those showing the potential to create harm.

- Establishing tools and guidance for authenticating digital content.

- Exploring the “complexities” at the intersection of emerging AI technology and society.

NIST accepted letters of interest from organizations seeking to join the consortium through January 15.

Copyright 2024, RepublicanReport.org